Moderation

Last updated: 20 May 2025

How we think about moderation

At Mindjoy, we believe that young people deserve safe spaces to learn, explore, and create. Our approach to moderation is grounded in the principle of protecting student wellbeing while enabling rich learning experiences. Rather than relying solely on blocklists or rigid filters, we’ve designed a system that is transparent, explainable, and responsive – one that helps educators and students understand why something isn’t appropriate in a learning context and gives them the tools to respond appropriately.

Safety isn't just about catching harmful content. It’s also about building trust – making sure that students, teachers, and parents know that we’re paying attention, and that when difficult or sensitive topics arise, we handle them with care. An important aspect of this is giving institutions the ability to make their own choices regarding moderation that align with their values, ethos and perspective.

Continue reading if you’d like to find out more about how our moderation system works.

System Overview

Moderation applies to various user inputs on the Mindjoy platform. Since Mindjoy enables multimodal learning through text, images and audio, we have systems in place to ensure safety across each of these modalities. To help ensure that our system is both explainable and testable, moderation settings are either global (i.e. applied to all Mindjoy users) or selected for your entire organization. This lets you use Mindjoy yourself and feel confident that your experience matches that of your students.

Text moderation

How it works

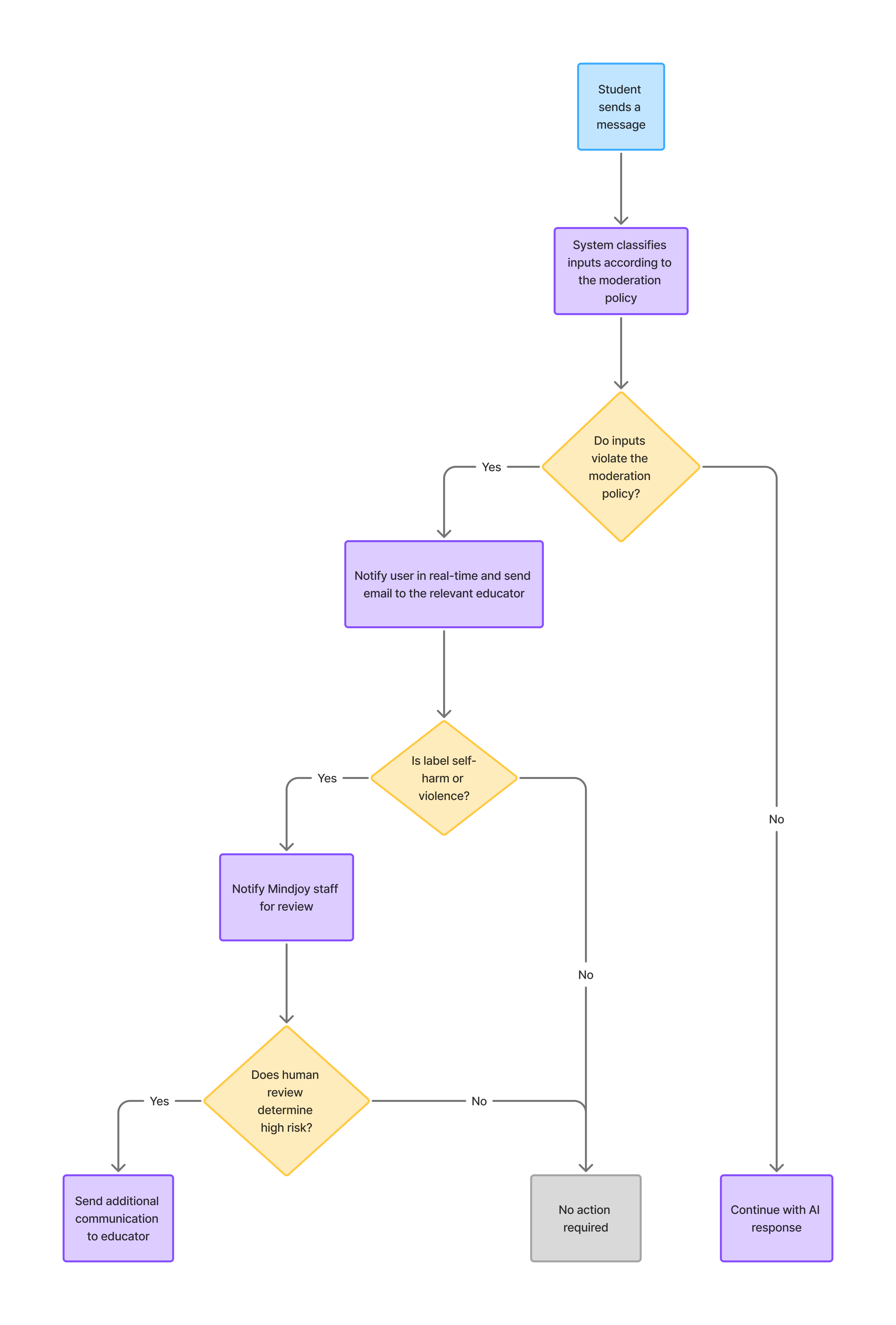

Our moderation system automatically reviews user inputs, such as text messages sent in AI chats, against the clearly defined moderation policy chosen by your organization. If a message violates the policy, it is flagged with a relevant category (e.g. violence or profanity), and the student is immediately told why their input cannot be processed. The responsible educator is then notified of the flagged content. For high-severity issues such as self-harm or violence, Mindjoy staff are also alerted to enable quick escalation and appropriate follow-up.

The text moderation system performs best in English, but it can still evaluate text in a large number of other languages with high accuracy.

Flow diagram for text moderation
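For readers who think in code, the flow above can be sketched roughly as follows. This is a minimal illustration and not Mindjoy's implementation: `moderate_text`, `ModerationResult`, `toy_classifier` and the `HIGH_SEVERITY` set are hypothetical names, and the classifier is a placeholder for the real policy-aware model.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional

# Assumption: the text names self-harm and violence as examples of
# high-severity issues; the complete list is not published.
HIGH_SEVERITY = {"self-harm", "violence"}


@dataclass
class ModerationResult:
    allowed: bool
    category: Optional[str] = None
    student_message: Optional[str] = None
    notified: List[str] = field(default_factory=list)


def moderate_text(
    message: str,
    policy: str,
    classify: Callable[[str, str], Optional[str]],
) -> ModerationResult:
    """Review one message against the organization's policy."""
    category = classify(message, policy)
    if category is None:
        # No violation: the message is processed as normal.
        return ModerationResult(allowed=True)

    # A violation always notifies the responsible educator;
    # high-severity categories also alert staff.
    notified = ["educator"]
    if category in HIGH_SEVERITY:
        notified.append("mindjoy_staff")

    return ModerationResult(
        allowed=False,
        category=category,
        student_message=f"Your message can't be processed: it was flagged for {category}.",
        notified=notified,
    )


def toy_classifier(message: str, policy: str) -> Optional[str]:
    """Placeholder only: a real classifier would be a policy-aware model."""
    if "hurt myself" in message.lower():
        return "self-harm"
    return None


result = moderate_text("I want to hurt myself", "Strict", toy_classifier)
print(result.category, result.notified)
```

Passing the classifier in as a parameter keeps the routing logic (who is informed, and when) separate from the question of how a violation is detected.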

Policies

Every organization using Mindjoy can select one of three preset moderation policies: Strict, Moderate or Lenient. This allows you to make an informed decision about how moderation is applied to your organization. When choosing a policy, we recommend that organizations consider the age of their students, the subject matter they will need to learn, and any special educational needs or disabilities.

Below is an overview of the available policies on Mindjoy:

| Policy | Description | Recommended audience |
|---|---|---|
| Strict | Prohibits any discussion of sexual content (including biology or health), drugs, violence, weapons, illegal activities, harassment, or profanity. Blocks even factual questions about these topics. Zero tolerance for advice or instructions related to self-harm, suicide, or violence. | Primary school students |
| Moderate | Allows educational discussion of topics like sexual health, historical violence, and mild profanity, but blocks explicit content, harmful advice, and hate speech. Still restricts graphic violence, serious illegal activity, and explicit sexual content outside of educational contexts. | High school students |
| Lenient | Allows sensitive, controversial, or mature topics if discussed in an academic, non-malicious way. Focuses on preventing severe harms: credible threats, self-harm intent, targeted harassment, illegal acts causing significant harm, and explicit pornography. Allows profanity, violent or sexual themes in educational or artistic contexts. | Students over 18 years old, students in higher education institutions |
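The recommended audiences in the table can be read as a simple lookup. The sketch below is illustrative only: the audience keys and the `recommended_policy` helper are hypothetical, and falling back to Strict for an unrecognised audience is an assumption based on Strict being the default policy.

```python
from enum import Enum


class Policy(Enum):
    STRICT = "Strict"
    MODERATE = "Moderate"
    LENIENT = "Lenient"


# Audience keys are hypothetical labels for the "Recommended audience" column.
RECOMMENDED = {
    "primary_school": Policy.STRICT,
    "high_school": Policy.MODERATE,
    "higher_education": Policy.LENIENT,
    "over_18": Policy.LENIENT,
}


def recommended_policy(audience: str) -> Policy:
    """Return the suggested starting policy for an audience.

    Unknown audiences fall back to Strict (an assumption, chosen because
    Strict is the default for every organization).
    """
    return RECOMMENDED.get(audience, Policy.STRICT)


print(recommended_policy("high_school").value)
```

A lookup like this is only a starting point: subject matter and any special educational needs or disabilities should also inform the final choice.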

Escalation

In cases where escalation is required, educators will receive an automated email notifying them of the moderation event and providing a link to review the student’s conversation.

For critical cases, Mindjoy staff may also reach out directly to the responsible educator.

Image moderation

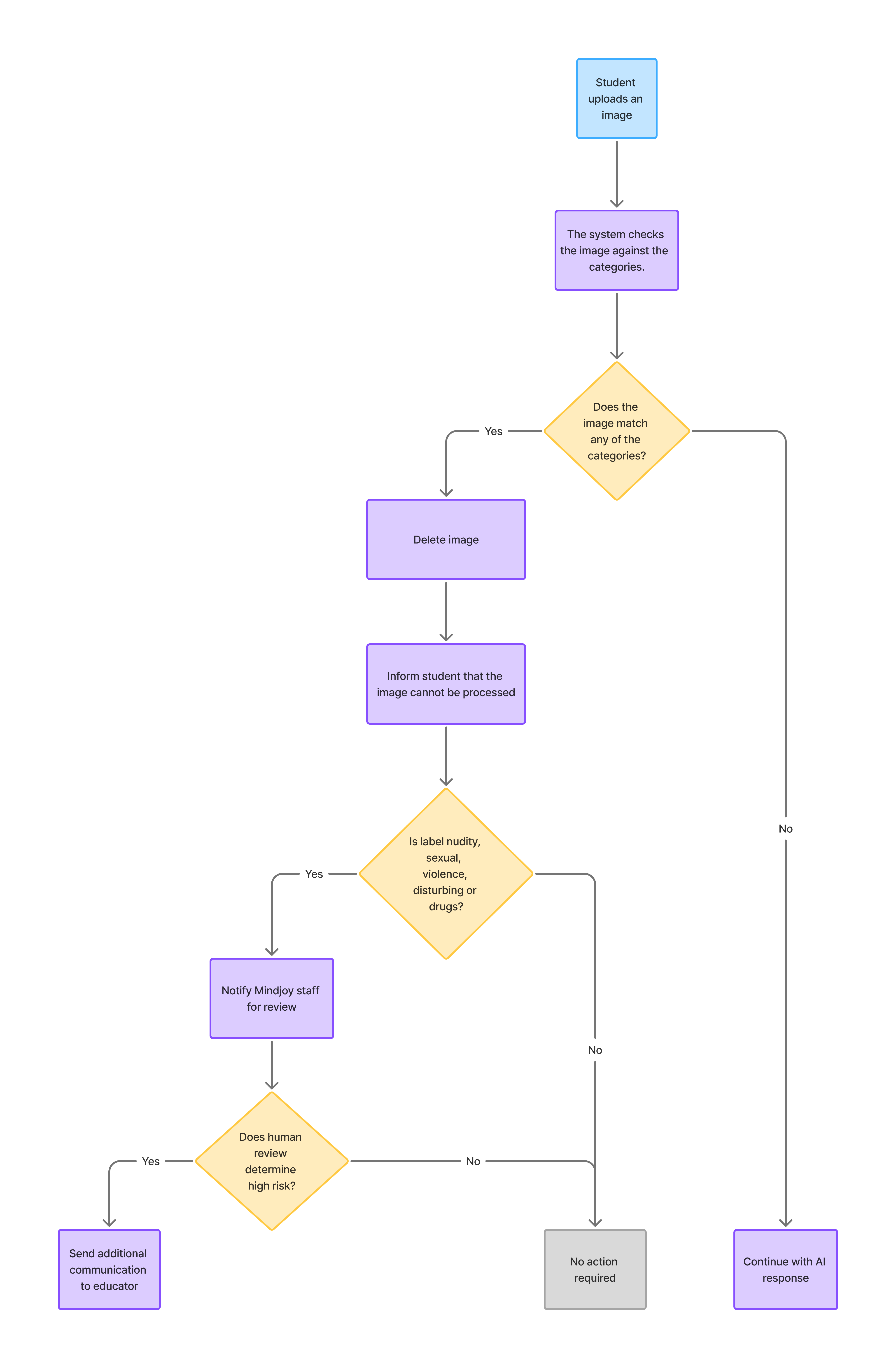

In addition to text, Mindjoy’s moderation system includes image moderation to help keep students safe from inappropriate or harmful visual content. An AI system analyzes each image uploaded to the platform and classifies it into categories based on the features it detects. Image moderation is simpler than text moderation in that the same categories apply to all users, regardless of the moderation policy in place.

How it works

When you upload an image, we run an automated scan for things like nudity, sexual content, violence, disturbing imagery, drugs, or signs that the subject might be a minor. If it passes, the process continues and the image can be used on the platform. If any moderation label is identified, the image is deleted immediately and the user will see a short message explaining why we can’t use it. A private, encrypted backup stays in our system for up to seven days so our team can review serious cases and, if needed, notify the relevant educator.

Below are the labels that are used to flag inappropriate content.

| Label | Description |
|---|---|
| face | Faces detected in the image (checked for minors). |
| nudity | Explicit or non-explicit nudity, underwear, swimwear. |
| sexual | Explicit sexual acts, sex toys, intimate parts. |
| violence | Graphic or realistic physical violence. |
| disturbing | Visually disturbing or graphic imagery. |
| drugs | Depictions of drugs, tobacco, or alcohol. |
| hate | Hate symbols, extremist imagery. |
| rude | Rude gestures like offensive hand signs. |
| suggestive | Suggestive or provocative non-explicit content. |
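The upload decision described in this section can be sketched as below. It is a rough illustration under stated assumptions: `moderate_image` and `ImageDecision` are hypothetical names, the detected labels are assumed to come from a separate scanner, and every label is treated the same way because the text does not specify how the `face` label combines with the check for minors.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Iterable, Optional

# Labels from the table above.
LABELS = frozenset(
    {"face", "nudity", "sexual", "violence", "disturbing",
     "drugs", "hate", "rude", "suggestive"}
)

# "Up to seven days" per the text; the exact retention logic is an assumption.
BACKUP_RETENTION = timedelta(days=7)


@dataclass
class ImageDecision:
    accepted: bool
    labels: frozenset
    user_message: Optional[str] = None
    backup_expires_at: Optional[datetime] = None


def moderate_image(
    detected_labels: Iterable[str],
    now: Optional[datetime] = None,
) -> ImageDecision:
    """Decide what happens to an uploaded image given the scanner's labels."""
    now = now or datetime.now(timezone.utc)
    flagged = frozenset(detected_labels) & LABELS
    if not flagged:
        # Nothing detected: the image can be used on the platform.
        return ImageDecision(accepted=True, labels=flagged)

    # Any label means the image is rejected; only an encrypted backup
    # would remain, for a limited review window.
    return ImageDecision(
        accepted=False,
        labels=flagged,
        user_message="We can't use this image. Flagged for: " + ", ".join(sorted(flagged)),
        backup_expires_at=now + BACKUP_RETENTION,
    )


decision = moderate_image({"violence", "disturbing"})
print(decision.accepted, decision.user_message)
```

Because image labels are global, the function takes no policy argument, unlike the text moderation flow.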

Escalation

For critical cases, Mindjoy staff may reach out directly to the responsible educator.

Audio moderation

How it works

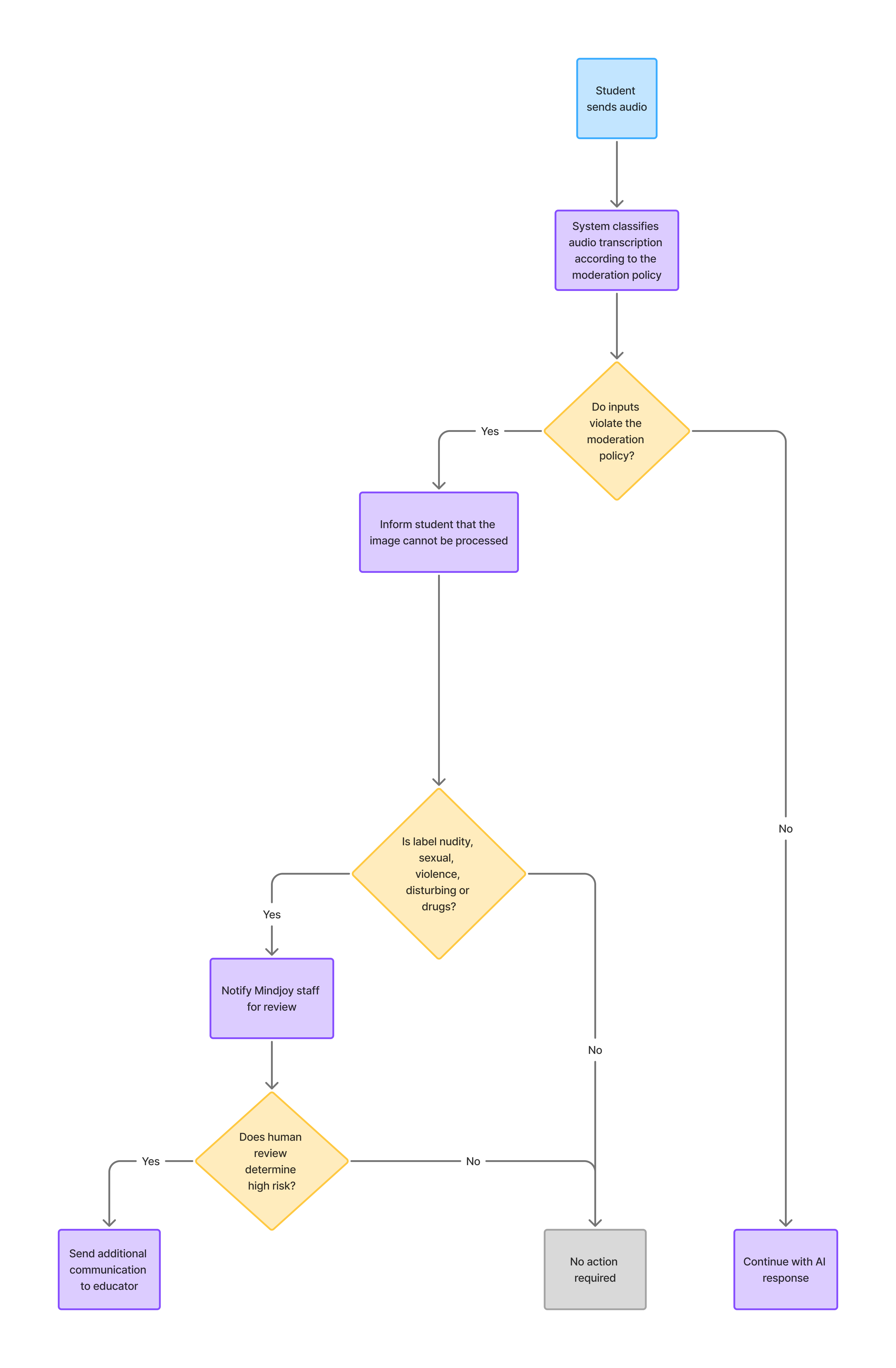

The audio moderation system is an extension of the text moderation system, with an audio transcription step added before the input is evaluated. When a user records audio for use on the platform, the system first transcribes the audio into text. This text is then evaluated by the moderation system against your organization’s selected policy. See the Text moderation section for more details on how this works.
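Put as code, audio moderation is the text check with one extra step in front of it. The sketch below is illustrative: `moderate_audio` is a hypothetical name, and both the transcriber and the text moderator are passed in as stand-ins because the real components are not described here.

```python
from typing import Callable, Optional


def moderate_audio(
    audio: bytes,
    policy: str,
    transcribe: Callable[[bytes], str],
    moderate_text: Callable[[str, str], Optional[str]],
) -> Optional[str]:
    """Transcribe the recording, then reuse the text moderation check.

    Returns the flagged category, or None if the transcript passes.
    """
    transcript = transcribe(audio)
    return moderate_text(transcript, policy)


# Stand-ins so the sketch runs; real speech-to-text and classification
# would replace these.
def fake_transcribe(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_moderate_text(text: str, policy: str) -> Optional[str]:
    return "profanity" if "damn" in text.lower() else None


print(moderate_audio(b"well damn", "Strict", fake_transcribe, fake_moderate_text))
```

Reusing the text check means that a change to the organization's policy applies to spoken input as well as typed input.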

Escalation

For critical cases, Mindjoy staff may reach out directly to the responsible educator.

Getting started

By default, all organizations have the Strict policy selected, and there’s no need to change this if it fits your needs. If another policy is better suited to the age group of your students or the subject matter they will be learning, follow these steps:

- Select the appropriate moderation policy for your organization. We suggest discussing this with your organization’s leadership.

- Change your organization policy by navigating to your organization settings. Note: you’ll need to have admin permissions in order to view and change this setting.
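The default and the admin requirement above can be summarised in a short sketch. This models the behaviour described here and is not the Mindjoy settings API: `Organization` and `change_policy` are hypothetical names.

```python
from dataclasses import dataclass

POLICIES = ("Strict", "Moderate", "Lenient")


@dataclass
class Organization:
    name: str
    policy: str = "Strict"  # every organization starts on Strict


def change_policy(org: Organization, new_policy: str, is_admin: bool) -> Organization:
    """Change the organization-wide policy; only admins may do so."""
    if not is_admin:
        raise PermissionError("Only organization admins can change the moderation policy.")
    if new_policy not in POLICIES:
        raise ValueError(f"Unknown policy: {new_policy!r}")
    org.policy = new_policy
    return org


org = Organization("Example School")
change_policy(org, "Moderate", is_admin=True)
print(org.policy)
```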

Future work

We continuously monitor our moderation system and make improvements over time. This includes adjustments to the policies that are applied and to the various AI models that are used to classify user inputs.

As an organization, we also follow developments and changes in AI legislation. Specifically, we’ve aligned our systems and processes with the upcoming EU AI Act, as well as the UK government’s Generative AI product safety expectations.

If you have any ideas or feedback, please share them with us at support@mindjoy.com.

In future, we’d like to:

- Provide more preset policies to choose from

- Provide better visual feedback to users

- Allow users to select a policy that differs from their organization’s in specific, approved cases